USING AM RADIOS TO CREATE YOUR OWN THEREMIN-LIKE SOUNDS

I break down the science behind a technique to generate eerie, sci-fi-inspired tones—similar to a theremin—using nothing but AM radios, and show how you can experiment with it yourself to create unconventional sound effects.

Sound designers are always looking for new ways to create unique textures, and AM radios offer an unconventional method for crafting eerie, otherworldly sounds. By exploiting feedback and heterodyning (a phenomenon where two frequencies interact to produce a third, the "beat frequency"), you can produce dynamic tones reminiscent of the theremin, an instrument famous for its haunting and alien sound.

The theremin has been a staple in sci-fi and horror soundtracks, helping to shape iconic atmospheres. Let’s explore how you can replicate this sound with AM radios and draw inspiration from its use in film and television.

The Science Behind the Sounds

AM radios work by tuning into invisible waves in the air called radio frequencies. When multiple radios tuned to slightly different frequencies are placed near each other, their signals mix and interfere, creating a new tone at the difference between those frequencies, called the beat frequency. This process, combined with feedback loops and environmental factors like device proximity, generates the expressive, theremin-like tones you can manipulate in real time.

Why This Resembles a Theremin

A theremin produces sound by mixing two high-frequency oscillators to create an audible beat frequency, and you control the pitch and volume by interrupting the fields around its antennas. The interaction of the radios mimics this mixing of frequencies, and your movements can influence the "field" in a similar way.

It’s an intriguing example of how everyday devices can unintentionally produce musical phenomena!

Theremin in Film and Television

The theremin’s eerie sound has long been used in entertainment to evoke mystery, otherworldliness, or humor. Here are some standout examples that demonstrate the theremin’s versatility, from suspenseful and eerie to campy and fun.

1. Doctor Who (1963–present)

The theremin-like tones in the iconic Doctor Who theme helped establish the show’s alien and futuristic tone. The original 1963 version was realized at the BBC Radiophonic Workshop with tape manipulation and test-tone oscillators rather than a theremin or synthesizer, but you can recreate a similar mood using AM radios. Experiment with tuning and feedback to generate sounds that feel as vast and mysterious as time and space itself.

2. Star Trek: The Original Series (1966–1969)

While the theremin wasn’t heavily featured, Star Trek used similar electronic tones to highlight alien worlds and futuristic technology. By arranging AM radios in unique patterns and fine-tuning their frequencies, you can create soundscapes that echo the series’ exploration of the unknown.

3. It Came from Outer Space (1953)

A quintessential example of the theremin’s role in 1950s sci-fi, this film used the instrument to signify alien presence and tension. AM radios can mimic these haunting tones, allowing you to capture the spirit of classic science fiction with minimal equipment.

4. Mars Attacks! (1996)

Danny Elfman’s playful homage to 1950s sci-fi soundtracks prominently featured the theremin to comedic effect. Similarly, AM radios can produce exaggerated, retro-futuristic tones, perfect for parodying or paying tribute to this distinctive style.

How to Create Your Own Theremin-Like Sounds

Gather Your Materials:

Three AM radios

A quiet space with minimal interference

Recording equipment

Tune the Radios

Find static-filled stations on all three radios

Start by tuning all three radios to stations with static or minimal interference (no clear music or talking). This ensures the frequencies will interact cleanly without external noise dominating the mix.

Create Different Pitches

Slightly offset the frequencies of the radios. For example, if one radio is set to 600 kHz, tune the second to 602 kHz and the third to 604 kHz.

The pitch of the sound depends on the difference between the frequencies of the radios. A smaller difference (e.g., 600 kHz and 601 kHz, a 1 kHz gap) will create a lower tone around 1,000 Hz, while a larger difference (e.g., 600 kHz and 605 kHz) will produce a higher-pitched tone around 5,000 Hz. To get a slow "wobble" rather than a steady pitch, bring two radios to within a few hertz of each other.
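To make the arithmetic concrete, here is a minimal Python sketch that lists the difference tones you would expect from a set of dial settings. The function names are my own for illustration, and it assumes an idealized case where each pair of radios contributes one tone at exactly the difference between their tunings; real radios will be messier.

```python
from itertools import combinations


def beat_frequencies_hz(tunings_khz):
    """Return the pairwise difference (beat) frequencies in Hz, sorted."""
    return sorted(
        round(abs(a - b) * 1000.0, 3)  # kHz difference converted to Hz
        for a, b in combinations(tunings_khz, 2)
    )


def is_audible(freq_hz, low_hz=20.0, high_hz=20000.0):
    """Rough check against the nominal 20 Hz to 20 kHz hearing range."""
    return low_hz <= freq_hz <= high_hz


if __name__ == "__main__":
    for tunings in ([600, 601], [600, 605], [600, 602, 604]):
        beats = beat_frequencies_hz(tunings)
        print(tunings, "kHz ->", beats, "Hz")
```

With three radios at 600, 602, and 604 kHz, the sketch reports two 2,000 Hz differences and one 4,000 Hz difference, which is one reason a three-radio setup can sound richer than a pair.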

Arrange and Experiment

Try positioning the radios in different arrangements such as a triangle or line.

Move them closer together or adjust their tuning to explore new sounds, listening to how the tones interact. Fine adjustments can produce subtle variations in pitch, creating complex and evolving sounds.

Wave your hands or objects near the antennas to manipulate the electromagnetic fields, creating dynamic pitch and tone shifts.

Record and Enhance

Capture your session with a microphone or direct recording. Use software to add reverb, delay, or other effects to deepen the atmosphere.

Applications in Sound Design

AM radio techniques are perfect for:

Sci-Fi Projects: Create alien communication tones, spaceship hums, or ambient drones inspired by classics such as Doctor Who or Star Trek.

Horror: Generate ghostly and unsettling soundscapes reminiscent of It Came from Outer Space.

Comedy or Parody: Craft exaggerated, retro-futuristic tones like those in Mars Attacks! for a playful nod to classic sci-fi.

By drawing inspiration from the theremin’s rich history in film and television and experimenting with AM radios, you can craft unique sound effects that stand out in your projects. Whether you're building tension, evoking mystery, or just having fun, this technique offers endless creative possibilities.

Ready to elevate your project with expert sound design? Let's bring your vision to life! Explore my services or contact me today to discuss your project requirements. Thank you for visiting sameliaaudio.com. Stay tuned for more insights and analysis on cinematic storytelling through sound.

EAR TRAINING FOR SOUND DESIGNERS AND AUDIO ENGINEERS: ESSENTIAL SKILLS FOR POST-PRODUCTION

I go into why ear training matters, how it improves efficiency and collaboration in post-production, and the top three tools to refine your skills—whether you’re a beginner or a seasoned pro.

For sound designers and audio engineers, critical listening is the backbone of creating great mixes and sound effects. Whether you're tuning an EQ, crafting a reverb, or balancing a mix, having finely-tuned ears can make all the difference. A well-calibrated system is only as good as your ears, and while proper room treatment and tuning are essential, developing your ability to hear and interpret sound accurately is equally crucial. Ear training helps you identify frequencies, recognize subtle details in sound, and make informed decisions during production. Here’s an overview of how ear training can improve your craft and the top three programs across budget levels to help you refine your listening skills.

Why Ear Training is Crucial

Train your ears like a pro!

Developing critical listening skills not only improves your technical abilities but also saves time and budget in the production process. Being able to quickly identify problematic frequencies or subtle flaws in production audio reduces the time spent chasing down issues during post-production. This efficiency leads to smoother sessions and happier clients, as revisions and reworks are minimized. Additionally, well-trained ears allow you to make confident creative decisions, ensuring your production achieves its desired impact without over-reliance on guesswork or endless iterations.

Ear training isn’t just for sound designers—it’s equally beneficial for directors, editors, and producers involved in post-production. When everyone on the team has a shared understanding of sound and terminology, communication becomes more efficient and productive. A producer who can articulate, for example, that dialogue sounds boxy around 300 Hz or that a background ambience feels overly bright above 8 kHz helps audio engineers address issues faster and more effectively. This shared language reduces misinterpretation and fosters a more collaborative and streamlined workflow.

Ear training enhances your ability to:

1. Identify Frequency Ranges: Recognizing frequencies allows you to pinpoint problem areas in dialogue, effects, or music and address them efficiently.

2. Distinguish Tonal Characteristics: Understanding how different audio elements sound helps create balance and depth in the mix.

3. Detect Subtle Changes: Being able to hear subtle EQ adjustments or phase issues ensures a polished, professional final product.

4. Improve Translation Across Systems: Critical listening ensures your mixes sound great on various playback systems, from cinema speakers to sound bars.

Ear Training Programs for Every Budget

Now that I’ve established why ear training is essential, the next question is: how do you train your ears effectively? While practice with real-world projects is valuable, targeted tools and programs can accelerate your growth by providing structured exercises and immediate feedback. These programs simulate the challenges you’ll face in post-production, such as identifying problematic frequencies, balancing sound design elements, and fine-tuning your effects chain. Whether you’re a beginner or a seasoned professional, investing in the right ear training tool can help sharpen your critical listening skills and take your audio work to the next level. Here are three top-rated programs across different budget levels to fit your needs and goals.

Free Option: SoundGym

Website: SoundGym

Overview: SoundGym offers a robust suite of ear training exercises designed specifically for audio engineers and sound designers. With a free account, you gain access to games that improve your ability to recognize frequencies, dynamics, and stereo imaging.

Benefits:

Interactive Games: Games like EQ Mirror and Filter Expert focus on identifying specific frequency boosts and cuts.

Progress Tracking: Track your skill growth over time with detailed statistics.

Community Engagement: Compete with other users and join forums for shared tips and feedback.

Why It’s Great for Beginners: SoundGym is accessible and user-friendly, making it ideal for those starting their ear training journey. The gamified approach keeps learning fun and engaging.

Mid-Cost Option: TrainYourEars EQ Edition

Price: ~$99

Website: TrainYourEars

Overview: TrainYourEars EQ Edition is a software specifically focused on helping you understand and recognize EQ adjustments. By listening to audio samples with applied EQ changes, you’ll learn to identify frequency ranges with precision.

Benefits:

Customizable Training Sessions: Tailor the exercises to your skill level and specific goals.

Practical Applications: Mimics real-world scenarios you’d face during mixing and mastering.

Detailed Feedback: Immediate feedback helps you understand mistakes and improve faster.

Why It’s Perfect for Intermediate Users: This program bridges the gap between beginner exercises and real-world mixing. It’s particularly useful for engineers aiming to fine-tune their frequency recognition skills.

High-Cost Option: Golden Ears by Dave Moulton

Price: ~$300

Website: Golden Ears

Overview: Created by renowned audio engineer Dave Moulton, Golden Ears is an intensive ear training program designed for professionals. It comes as a set of CDs or downloadable files featuring exercises in frequency recognition, dynamic range, and sound timbre.

Benefits:

Comprehensive Curriculum: Covers all aspects of audio, from basic frequency recognition to advanced critical listening.

High-Quality Audio Examples: Real-world examples and professional-level recordings ensure accurate learning.

Proven Results: Used by audio degree programs and industry professionals alike to hone their critical listening skills.

Why It’s Ideal for Pros: This program is for those who are serious about mastering critical listening. It’s thorough, challenging, and provides the depth required for professional growth.

If you’re truly dedicated to advancing your skills, investing in Golden Ears is a worthwhile decision.

While the upfront cost is higher, the Golden Ears program’s comprehensive training ensures you’ll gain the ear training expertise needed to tackle even the most demanding post-production challenges. Whether it’s recognizing subtle tonal differences or crafting the perfect sonic balance, Golden Ears equips you with quality training to elevate your work to a high professional standard. Serious skills require serious tools—and Golden Ears delivers exactly that.

How to Incorporate Ear Training Into Your Routine

Develop a routine that includes 15–30 minutes of ear training each day.

Daily Practice: Dedicate 15–30 minutes each day to ear training. Consistency is key! I found that pairing ear training with a morning routine helped me stay consistent, setting the tone for a productive day.

Apply What You Learn: Test your skills in real-world scenarios while mixing or sound designing. Since becoming proficient with ear training, the biggest thing I've noticed is that knowing which frequency to EQ, without sweeping to zero in on the problem, not only improves your speed but also keeps you from over-EQing, which gives more natural results.

Experiment with Different Tools: Use reference tracks and A/B testing to develop your critical listening further. Tools like spectrum analyzers or ear training apps can give you real-time feedback, making it easier to spot patterns and progress. The real-time analyzer in FabFilter’s Pro-Q was super helpful for me when I was beginning.

Stay Patient: Ear training is a gradual process. Celebrate small victories along the way!
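If you want to track your own practice between sessions with the programs above, the bookkeeping for a simple frequency-guessing drill can be sketched in a few lines of Python. This is only an illustration: the function names and the fixed ±6 dB boost or cut are my own assumptions, it is not how any of the programs mentioned here works, and it does not apply any EQ to audio. It just picks a band and scores how far off a guess was, measured in octaves.

```python
import math
import random

# Standard octave-band centre frequencies in Hz
OCTAVE_CENTERS_HZ = [31.5, 63, 125, 250, 500, 1000, 2000, 4000, 8000, 16000]


def pick_question(rng):
    """Pick a random band and a boost or cut (in dB) for one drill round."""
    return rng.choice(OCTAVE_CENTERS_HZ), rng.choice([-6.0, 6.0])


def error_in_octaves(guess_hz, actual_hz):
    """Distance between guess and answer in octaves (0.0 is a perfect guess)."""
    return abs(math.log2(guess_hz / actual_hz))


if __name__ == "__main__":
    rng = random.Random()
    center_hz, gain_db = pick_question(rng)
    # Set this band and gain on your own EQ, listen, then compare your guess.
    print(f"Answer: {gain_db:+.0f} dB at {center_hz} Hz")
    print("Guessing 500 Hz would be off by",
          round(error_in_octaves(500, center_hz), 2), "octaves")
```

Scoring in octaves rather than hertz matches how we hear: mistaking 500 Hz for 1 kHz is the same size of miss as mistaking 4 kHz for 8 kHz.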

Conclusion

Developing your critical listening skills is an investment in your craft. Whether you’re just starting out or are an experienced professional, ear training can take your audio work to the next level. By incorporating tools like SoundGym, TrainYourEars, or Golden Ears into your routine, you’ll build the skills needed to create mixes that not only sound great but translate across all systems.

Start training your ears today, and unlock the full potential of your audio projects!


AFFORDABLE ULTRASONIC MIC? SONOROUS OBJECTS SO.104 REVIEW WITH AUDIO SAMPLES

I review the Sonorous Objects SO.104, an affordable ultrasonic microphone that’s perfect for field recording and sound design.

Introduction

In my previous post on ultrasonic microphones, I mentioned ordering a stereo pair of the Sonorous Objects SO.104 after reviewing its promising specifications. Now, after some hands-on testing, I’m sharing my insights into how these microphones perform in real-world scenarios. While this review isn’t a comprehensive teardown with intricate measurement data, it offers a practical look at how the SO.104 performs in typical use cases. With example recordings and real-world observations, I’ll explore whether this mic delivers on its promise as an affordable ultrasonic option for field recording and sound design.

First Impressions of the Sonorous Objects SO.104

Unboxing Experience

The Sonorous Objects SO.104 arrived in simple, no-frills packaging: a small cardboard box with each item securely bubble-wrapped and placed in individual plastic bags. A slip of paper included the microphones’ sequential serial numbers, suggesting they are paired by production order to reduce costs. While this approach can work well due to consistent materials and assembly, it’s worth noting that no two microphones are ever perfectly identical. For critical applications like stereo recording of orchestras or drum overheads, even minimal differences can affect the stereo image. However, at this price point, I wouldn’t expect the level of quality assurance seen in high-end or boutique microphones.

Spaced pair of SO.104 omnis

Build Quality

The SO.104 features a sturdy (3D-printed?) housing that encases the Primo EM258 capsule, mounted on a high-quality Neutrik XLR connector. This capsule is known for its wide frequency response (20 Hz to 70 kHz), making it ideal for capturing both audible and ultrasonic frequencies. Key specifications include:

Sensitivity: -32 dB

Self-noise: 20 dBA

Signal-to-noise ratio: 74 dB

Maximum SPL: 115 dB

This combination allows the SO.104 to capture intricate audio details, whether it’s subtle environmental sounds or audio that will be pitch-shifted. The low noise floor further enhances its usability for field recording and sound design.
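The listed figures hang together, which is a quick sanity check worth doing on any spec sheet. Here is a small Python sketch of that arithmetic. It assumes the common convention that signal-to-noise ratio is quoted relative to a 94 dB SPL (1 Pa) reference; the function names are my own.

```python
REFERENCE_SPL_DB = 94.0  # 1 Pa, the usual reference level for microphone SNR


def snr_db(self_noise_dba):
    """Signal-to-noise ratio relative to the 94 dB SPL reference."""
    return REFERENCE_SPL_DB - self_noise_dba


def dynamic_range_db(max_spl_db, self_noise_dba):
    """Span between the noise floor and the maximum SPL."""
    return max_spl_db - self_noise_dba


if __name__ == "__main__":
    print("SNR:", snr_db(20.0), "dB")                              # 74.0
    print("Dynamic range:", dynamic_range_db(115.0, 20.0), "dB")   # 95.0
```

A self-noise of 20 dBA gives the 74 dB signal-to-noise ratio quoted above, and the gap between the noise floor and the 115 dB maximum SPL works out to roughly 95 dB of usable range.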

Design and Aesthetics

Compact and lightweight, the SO.104 is easy to integrate into most setups with its standard XLR connector. However, the foam windscreens from the SO.100 series require mic clips to clamp low on the mic body, near the connector. Aesthetically, the microphones feel more like prototypes or DIY builds, with a somewhat unfinished look—not a dealbreaker, but worth noting for those who value polished design.

Setup and Accessories

The SO.104’s compact design ensures easy setup in both studio and field environments. While Sonorous Objects offers some accessories, I needed to source a stereo bar and weatherproof hard case elsewhere. This might be a minor inconvenience for those looking for a complete kit. Additionally, the included foam windscreens didn’t fit snugly, so I wouldn’t recommend them for outdoor use.

Performance Evaluation

Sound Quality: The Sonorous Objects SO.104 offers a natural sound profile typical of small-diaphragm condenser (SDC) omnidirectional microphones. The mics handle dynamic range well, with minimal distortion or clipping, even at high sound pressure levels (SPL). Thanks to the low self-noise of the Primo EM258 capsule, recordings maintain clarity, capturing intricate details like subtle environmental sounds. If you’re seeking an affordable mic for ultrasonic recording, the SO.104 holds its own in capturing a broad frequency range.

Polar Patterns and Sensitivity: As omnidirectional mics, the SO.104s perform admirably across a wide sound field, with good off-axis response in the audible range. In typical use cases, such as recording ambient soundscapes, the mics deliver a balanced stereo image. Notably, when recordings are slowed to analyze ultrasonic content, the microphones reveal natural tonal shifts and nuanced textures, showcasing their ability to capture ultrasonic frequencies effectively.

Overall Observations

The SO.104 offers a flat, natural response across the audible range, consistent with what you’d expect from SDC omnis. Though not laboratory-grade in precision, the SO.104’s sensitivity and broad frequency capture make it a valuable field recording tool for sound designers, especially when capturing sounds for extreme downward pitch-shifting.

Microphone Data

Comparison with SE8 Matched Cardioid Pair

In my previous setup, I relied on a matched cardioid pair of SE8 microphones for stereo recordings. They are well regarded for their flat, natural sound and excellent performance in a variety of recording scenarios. The SE8 microphones are known for their precise off-axis rejection due to their cardioid polar pattern, which is ideal for capturing focused sound sources while minimizing unwanted noise from the surroundings. Their frequency response is fairly neutral across the audible range, with a slight high-frequency boost, delivering a transparent, uncolored sound ideal for critical recordings.

SE8 Matched Cardioid Pair Specs:

Polar Pattern: Cardioid

Frequency Response: 20 Hz - 20 kHz

Sensitivity: -35 dB

Self-Noise: 16 dBA

Max SPL: 136 dB

Condenser Membrane Size: 6 mm

The SE8 mics are generally considered versatile and flat in their performance, making them a reliable choice for a wide range of recording tasks, from vocals to ambient recordings. The cardioid pattern isolates sound effectively, offering great detail in the center while rejecting off-axis sounds. In my testing, I was surprised to see these mics are capable of recording detail up to around 30 kHz.

How the SO.104 Specs Compare to the SE8

The Sonorous Objects SO.104, on the other hand, brings a new dimension to the table, specifically targeting those interested in ultrasonic frequencies. While the SE8 is a more conventional choice for general-purpose recording, the SO.104 excels in capturing both audible and ultrasonic content, making it a unique tool for field recording and sound design.

Sonorous Objects SO.104 Specs:

Polar Pattern: Omnidirectional

Frequency Response: 20 Hz - 70 kHz

Sensitivity: -32 dB

Self-Noise: 20 dBA

Max SPL: 115 dB

Condenser Membrane Size: 5.8 mm

Compared to the SE8, the SO.104 offers a wider frequency range, extending into the ultrasonic spectrum (up to 70 kHz). This makes the SO.104 a great choice for capturing fine ultrasonic detail that the SE8 cannot. However, the SO.104 sacrifices the SE8’s isolation benefits: its omnidirectional pattern picks up more ambient noise, which makes it better suited to capturing a broader soundscape than to isolating a single source.

It is worth noting that omnidirectional microphones are often described as sounding more natural than cardioids. They capture sound from all directions, similar to how human ears hear, and they don’t exhibit the proximity effect, which is the low-frequency boost that directional microphones such as cardioids produce when placed close to a source. Many cardioids also become less directional at lower frequencies, so their rejection of low-frequency sounds from the sides and rear is less effective than it is higher up.

The SO.104’s sensitivity is slightly higher at -32 dB, compared with -35 dB for the SE8 (a less negative figure means more output for the same sound pressure), though a difference this small doesn’t change either mic’s ability to capture nuanced sounds. Additionally, the SO.104 has a slightly smaller condenser membrane (5.8 mm vs. 6 mm for the SE8); the difference is minor, though it may affect how each mic responds to transients and subtle textures. In my testing, I was surprised to see these mics are capable of recording detail up to and possibly exceeding 96 kHz.
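Sensitivity figures in dB are easier to compare once they are converted to output voltage. The Python sketch below does that conversion. It assumes both specs are quoted in dB relative to 1 V/Pa, which is the usual convention but is not stated on the spec lists above, and the function name is my own.

```python
def sensitivity_mv_per_pa(sensitivity_db):
    """Convert a sensitivity in dB re 1 V/Pa (assumed) to millivolts per pascal."""
    return 1000.0 * 10 ** (sensitivity_db / 20.0)


if __name__ == "__main__":
    so104 = sensitivity_mv_per_pa(-32.0)
    se8 = sensitivity_mv_per_pa(-35.0)
    print(f"SO.104: {so104:.1f} mV/Pa")
    print(f"SE8:    {se8:.1f} mV/Pa")
    print(f"Ratio:  {so104 / se8:.2f}x")
```

Under that assumption, -32 dB works out to about 25 mV/Pa and -35 dB to about 18 mV/Pa, so the less negative number is the hotter microphone.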

Overall, the SO.104 offers a unique feature set with its ability to capture ultrasonic frequencies, making it an exciting choice for those seeking to explore the high (frequency) end of the spectrum on a budget.

Real-World Examples and Data

To better demonstrate the SO.104’s ultrasonic capabilities, I’ve included slowed-down audio recordings in various contexts. These environmental and foley recordings show how ultrasonic details emerge when the mic captures frequencies above the audible range for typical sound design use cases. When slowed, even the tiniest nuances become apparent, further emphasizing the mic’s ability to reveal hidden layers of sound. When slowed down significantly (two octaves or more), recordings made with mics that do not extend into the ultrasonic range begin to sound muffled and dull.
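The reason is simple arithmetic: every octave of downward shift halves every frequency in the recording. Here is a minimal Python sketch, using the bandwidth figures quoted earlier in this review. The function names are my own, and the sample-rate helper only states the basic Nyquist limit.

```python
def shifted_frequency_hz(freq_hz, octaves_down):
    """Frequency after pitching down by a number of octaves."""
    return freq_hz / (2 ** octaves_down)


def min_sample_rate_hz(highest_freq_hz):
    """Nyquist limit: the sample rate must exceed twice the highest frequency."""
    return 2 * highest_freq_hz


if __name__ == "__main__":
    for name, top_hz in (("20 kHz mic", 20_000), ("30 kHz mic", 30_000),
                         ("70 kHz mic", 70_000)):
        print(name, "->", shifted_frequency_hz(top_hz, 2), "Hz after 2 octaves")
    print("Sample rate must exceed", min_sample_rate_hz(70_000), "Hz")
```

A mic that rolls off at 20 kHz leaves nothing above 5 kHz after a two-octave drop, while content captured up to 70 kHz still reaches 17.5 kHz. Capturing that content in the first place requires a sample rate above 140 kHz, which in practice means recording at 192 kHz.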

Graphs and data collected from these examples will be shared below, where you'll see how the frequency response translates to real-world recordings.

A screenshot of the foley session comparing the SO.104 (top pair) and SE8 (bottom pair) reveals the difference in the two mics’ ultrasonic responses. While the SE8s perform admirably, they simply do not have the high-frequency response required for extreme pitch shifting.

Example Recordings

SO.104 Recordings (Before)

SO.104 voice recordings before down-pitching, showing lots of ultrasonic content above 20 kHz.

SO.104 Recordings (After Down-pitching)

SO.104 voice recordings after down-pitching two octaves, showing lots of detail remaining up to 20 kHz, resulting in a convincing pitch shift.

SE8 Recordings (Before)

SE8 Recordings (After Down-pitching)

SE8 voice recordings after down-pitching two octaves are missing detail in the upper frequencies approaching 20 kHz, resulting in a muffled and dull pitch shift.

Conclusion and Final Thoughts

The Sonorous Objects SO.104 proves to be a capable and affordable ultrasonic microphone, offering quality performance at its price point. While it may not compete with high-end microphones in terms of build quality or advanced quality assurance testing, the SO.104 delivers solid results for both field recording and sound design. Its compact design, low self-noise, and wide frequency response make it an excellent option for capturing subtle environmental sounds and ultrasonic frequencies.

For users with a limited microphone budget, the SO.104 is a valuable addition to any mic locker, especially when working with ambient recordings or sound effects that require detailed capture of high-frequency audio. It’s an ideal choice for those looking to explore ultrasonic recording without breaking the bank.

While the ill-fitting SO.100 series foam windscreens may not be the best choice for outdoor use and additional accessories like a stereo bar and weatherproof hard case need to be sourced separately, the Sonorous Objects SO.104 provides great value for its price. Whether you’re a professional sound designer or an enthusiast, this mic is a unique tool that delivers performance where it counts.


UNLOCKING EFFICIENCY IN YOUR SOUND KIT: THE POWER OF THE Z-CABLE

I share why the Z-cable is my go-to solution for simplifying audio connections and boosting efficiency. I also walk you through how to build your own Z-cable, tailored to your setup needs.

When you're setting up for live sound or location audio, the last thing you want is to be digging through a bag of adapters and connectors, trying to find the right one. Enter the Z-cable—one of my favorite compact, customizable, problem-solving tools that audio engineers can use to streamline their kit and workflow in time-sensitive situations. This post will dive into what a Z-cable is, why it's invaluable for sound engineers, and guide you on how you can make your own.

What is a Z-Cable?

At its core, a Z-cable is an adaptable audio connector tool designed to help audio engineers connect different equipment without needing multiple adapters or “turnaround” barrels. A typical Z-cable has a combination of connector options on each end, allowing for instant adaptability without extra bulk. In practice, this means fewer separate adapters, fewer cables, and a faster, more efficient setup.

A Z-cable is versatile enough to work as a female XLR-to-jack adapter, a male XLR-to-jack adapter, a Y-split cable, a gender reverser, or even a short patch cable. It’s a really useful tool to have in your kit, covering multiple needs with just one cable.

Be sure that when using your Z-cable, you do not combine two outputs or feed phantom power from two sources at once. The design I will outline uses a female TRS connector with a male-to-male TRS barrel adapter to prevent the accidental tip contact that would be possible with a male TRS design.

Why We Audio Engineers Love Z-Cables

Z-cables are designed to be a flexible and efficient solution for real-time audio needs. Here’s why they’re a favorite among live sound and location audio engineers:

Space-Saving: Forget about cramming separate male-to-female and female-to-male adapters into your bag. A single Z-cable takes the place of several adapters, minimizing bulk and saving valuable space.

Increased Flexibility: In the fast-paced world of live sound, connections may need to change on the fly. With a Z-cable, you can instantly switch between different connector types and genders, reducing setup time and troubleshooting stress.

Cleaner Setups: Keeping cables organized is essential to maintaining clear sound and avoiding signal interference. Z-cables combine adapters into a single, streamlined unit, reducing cable clutter and allowing you to always find the right adapter.

Quick Problem-Solving: When something goes wrong, every second counts. With a Z-cable, you have built-in versatility to adapt connections quickly and efficiently.

DIY Guide: Building Your Own Z-Cable

If you're a hands-on audio engineer, building a Z-cable can be a simple yet rewarding project that lets you create something entirely customized to your unique setup. When I decided to make my own, I wanted a solution that would cut down on adapter clutter and be built to my own specifications. I spent around $30 on materials from Redco Audio and a couple of hours working on it and testing each stage to ensure it was as reliable and durable as I needed.

Here's my step-by-step guide, based on what I learned building my own Z-cable. If you’re ready to dive in and get a personalized, problem-solving addition to your kit, this guide will walk you through the process!

Materials Needed

XLR Male Connector (2) such as Neutrik NC3MXX

XLR Female Connector (2) such as Neutrik NC3FXX

TRS Female Connector (1) such as Neutrik NJ3FC6

TRS Gender Changer (1) - male-to-male (optional)

High-Quality Microphone Cable (you will need about 5 feet in order to cut and strip four 1-foot lengths of cable). I went with Mogami W2552.

Soldering Iron and Solder

Heat Shrink Tubing and Heat Gun (optional, for added durability and insulation; electrical tape can be used, but it is less reliable and leaves a sticky residue).

Step 1: Prepare the Cable

1. Start by cutting your cable to the desired length. For a compact Z-cable, 6–12 inches usually works well (be sure to leave enough length to work with your connectors).

2. Strip back the insulation at each end of the cable to expose the wires. You should see three wires inside: typically red (positive), white (negative), and a bare copper ground wire. Be careful not to over-expose the bare wire, which can lead to shorts and other issues. Heat shrink tubing greatly reduces these pitfalls.

Step 2: Attach the Connectors

Before you start soldering, remember to slide your heat shrink tubing and any other add-ons, such as strain relief, onto the cable!

1. Connect the male XLR connector to one end of the first length of cable and the female XLR connector to the other. Be sure to solder the wires correctly to the pins: Pin 1 to ground, Pin 2 to positive, and Pin 3 to negative.

2. Attach the next length of cable to the same female XLR connector, and attach a male XLR connector to the opposite end. Be sure to keep your wires matched up to the pins correctly.

3. Attach the third length of cable to the second male XLR connector you just attached, and attach the last female XLR connector to the opposite end, keeping your wires matched to the correct pins.

4. Attach the final length of cable to the female XLR connector you just attached, and attach the female TRS connector to the end, being sure your wires are connected to the correct pins: Sleeve to ground, Tip to positive, and Ring to negative. You may wish to attach or tether a male-to-male TRS gender-changer barrel for additional flexibility.

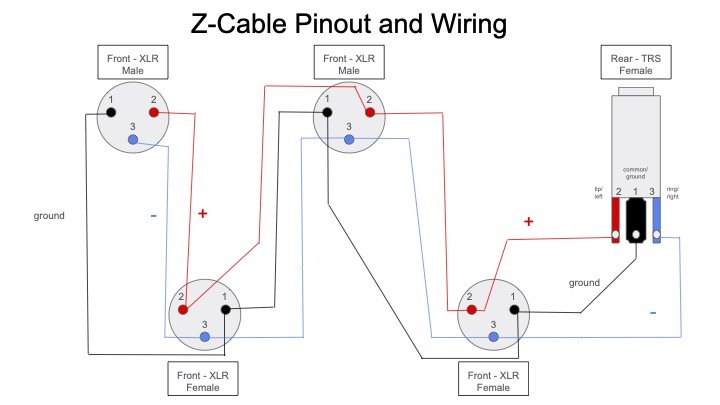

Whenever I am soldering cables, I like to have a pinout handy to avoid any crossed wires. Here is a custom pinout for this project:

The middle three connectors will each have two sets of wires soldered to the connection points. It may be helpful to twist these pairs together before tinning and soldering the connections.
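The wiring described in Step 2 can also be written down as a small lookup table, which is handy to keep next to the soldering iron or to compare against cable tester readings. This Python sketch is just one way to express it. The dictionary and function names are my own, and the mapping is taken directly from the pin assignments given in the steps above.

```python
# Pin assignments as given in Step 2 of this guide
XLR_PINOUT = {1: "ground", 2: "positive", 3: "negative"}
TRS_PINOUT = {"sleeve": "ground", "tip": "positive", "ring": "negative"}


def xlr_to_trs():
    """Map each XLR pin to the TRS contact carrying the same signal."""
    by_signal = {signal: contact for contact, signal in TRS_PINOUT.items()}
    return {pin: by_signal[signal] for pin, signal in XLR_PINOUT.items()}


def find_miswires(measured):
    """Compare measured pin-to-contact readings against the expected wiring.

    Returns {pin: (expected_contact, measured_contact)} for every mismatch.
    """
    expected = xlr_to_trs()
    return {
        pin: (expected[pin], measured.get(pin))
        for pin in expected
        if measured.get(pin) != expected[pin]
    }


if __name__ == "__main__":
    print(xlr_to_trs())
    # Example: tip and ring swapped at the TRS end
    print(find_miswires({1: "sleeve", 2: "ring", 3: "tip"}))
```

An empty result from find_miswires means the readings match the expected wiring; a swapped tip and ring shows up as two entries, one for pin 2 and one for pin 3.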

Step 3: Test and Insulate

1. Before completing the build, test the cable with a cable tester such as the Behringer CT100 6-in-1 Cable Tester to ensure it’s correctly wired and functional.

2. Once continuity is confirmed, use heat shrink tubing around the connections for additional insulation and to reinforce durability.

Tips for Customization

You can make Z-cables with a variety of connector types depending on your equipment needs. For example, the design I've outlined would allow you to expand upon it further by adding an additional length of left and right XLR connections to the TRS connector end.

Using color-coded connectors or heat shrink tubing can help you quickly identify each cable’s function in complex setups.

For even more adaptability, you could create multiple Z-cables in various lengths for specific applications, such as in-studio, on-stage, or field use.

Integrating Z-Cables with Your Audio Kit

The Z-cable fits right in with other problem-solving tools in an audio engineer’s kit, such as:

Inline Pads/Attenuators: These devices help you control high-level signals, and a Z-cable can seamlessly integrate with them to expand your options.

DI Boxes: Combining a Z-cable with a DI box lets you adapt connections for both balanced and unbalanced signals, making them more flexible in the field.

Signal Splitters and Combiners: Z-cables reduce clutter when used with splitters, making quick adjustments to the setup smoother and keeping everything clean and organized.

Wrapping Up

Whether you’re doing live sound or setting up in the field, a Z-cable is a powerful tool to have on hand. It helps you adapt faster, reduce clutter, and simplify setups, all while saving space in your kit. Building your own Z-cable can be a fun and rewarding project, letting you tailor it precisely to your needs.

With a Z-cable in your bag, you’ll be ready for whatever surprises your audio setup throws your way. So, roll up your sleeves and get ready to make this essential tool for your kit—you won’t regret it!

Ready to elevate your project with expert sound design? Let's bring your vision to life! Explore my services or contact me today to discuss your project requirements. Thank you for visiting sameliaaudio.com. Stay tuned for more insights and analysis on cinematic storytelling through sound.

THE HIDDEN WORLD OF ULTRASONIC SOUND: USING ULTRASONIC MICROPHONES IN SOUND DESIGN

I explore how ultrasonic microphones can capture details far beyond human hearing, opening up new possibilities for sound design. From high-end mics to budget-friendly options, I compare the best choices to help you find the right fit for your projects.

In sound design, capturing details beyond the ordinary spectrum of human hearing opens up new creative possibilities. Ultrasonic microphones, capable of recording frequencies well beyond the typical 20 kHz range, allow sound designers to tap into an unheard world of textures and unique sounds. In this post, we’ll explore how ultrasonic microphones are used in sound design and compare popular options across different budget ranges to help you choose the right one for your projects.

What Is an Ultrasonic Microphone?

An ultrasonic microphone is a specialized type of microphone that records frequencies far beyond what standard microphones can capture, often up to 100 kHz or more. This is especially useful for capturing high-frequency details from animals like bats, insects, or small mechanical sounds that produce ultrasonic tones. The resulting recordings can then be pitched down much further in post-production to reveal nuanced sounds that bring new dimensions to sound design, often producing eerie, alien, or hyper-realistic textures.

It is important to keep in mind that capturing ultrasonic frequencies requires a recorder capable of a high sample rate of 96 kHz or, preferably, 192 kHz, because a digital recording can only represent frequencies up to half its sample rate.
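As a quick check of the sample rate requirement, here is a minimal sketch of the half-the-sample-rate limit (the Nyquist limit). The function names are hypothetical, and real recorders filter a little below this theoretical ceiling.

```python
def max_recordable_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent (the Nyquist limit)."""
    return sample_rate_hz / 2


def min_sample_rate_hz(target_hz):
    """Lowest sample rate that can, in theory, represent target_hz."""
    return 2 * target_hz


if __name__ == "__main__":
    for rate in (48_000, 96_000, 192_000):
        top = max_recordable_hz(rate)
        print(f"{rate / 1000:g} kHz sample rate -> content up to {top / 1000:g} kHz")
```

At 96 kHz the ceiling is 48 kHz, and at 192 kHz it is 96 kHz, which is why the higher rate is preferable for microphones that extend well past 50 kHz.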

How Ultrasonic Microphones Are Used in Sound Design

Capturing Inaudible Textures: Ultrasonic microphones allow sound designers to record sounds that are usually inaudible. These could include high-frequency elements of machinery, the flutter of insect wings, or even ultrasonic emissions from certain materials. When slowed down, these sounds reveal intricate details and textures that enrich a soundscape.

High-Frequency Elements of Machinery: Imagine recording the ultrasonic frequencies produced by a spinning industrial fan. When slowed down, the sound could reveal rhythmic, pulsing tones with subtle metallic resonances, adding depth and realism to a scene set in a factory or sci-fi environment.

Flutter of Insect Wings: By capturing the high-pitched flutter of a dragonfly's wings with an ultrasonic microphone, you could slow down the recording to create a sound reminiscent of small, delicate engines or mechanical drones.

Ultrasonic Emissions from Materials: Certain materials, such as glass or metal, emit faint ultrasonic vibrations when under stress or when rubbed together. When slowed down, these sounds could serve as eerie atmospheres for horror or suspense scenes, adding an unsettling, almost imperceptible layer of tension.

Enhanced Sound Manipulation: By recording sounds at ultrasonic frequencies, designers can slow them down to create exaggerated and surreal effects, perfect for film, game design, or VR applications where exaggerated, hyper-real soundscapes enhance immersion.
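To get a feel for what "slowing down" does to an ultrasonic recording, the sketch below assumes the simplest kind of slowdown: playing the file back at a lower rate, so pitch and duration change together. The function names and the 40 kHz example tone are hypothetical.

```python
import math


def slowdown_factor(recorded_rate_hz, playback_rate_hz):
    """How many times slower a file plays when its sample rate is reinterpreted."""
    return recorded_rate_hz / playback_rate_hz


def slowed_frequency_hz(original_hz, factor):
    """Frequency heard after slowing playback by the given factor."""
    return original_hz / factor


def octaves_down(factor):
    """Pitch drop, in octaves, for a given slowdown factor."""
    return math.log2(factor)


def slowed_duration_s(original_s, factor):
    """Duration after slowing playback by the given factor."""
    return original_s * factor


if __name__ == "__main__":
    factor = slowdown_factor(192_000, 48_000)  # a 192 kHz file played at 48 kHz
    print(f"Slowdown: {factor:g}x ({octaves_down(factor):g} octaves down)")
    print(f"A 40 kHz tone lands at {slowed_frequency_hz(40_000, factor):g} Hz")
    print(f"A 0.5 s event lasts {slowed_duration_s(0.5, factor):g} s")
```

Under these assumptions, a 192 kHz recording played at 48 kHz drops two octaves, moving a 40 kHz tone down to 10 kHz, well inside the audible range.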

Example: In the 2018 God of War video game, the World Serpent, also known as Jörmungandr, is a massive, mythical creature that embodies the ocean and plays a significant role in the game's narrative and atmosphere. To achieve the ancient creature's resonant, otherworldly voice, the sound designer pitch-shifted human vocalizations and layered them with grumbles and rattles from various animal recordings.

Comparing Ultrasonic Microphones Across Budget Ranges

If you’re interested in diving into ultrasonic sound design, choosing the right microphone is essential. Here, we’ll compare three ultrasonic microphones across budget levels: two industry-standard options, the Sanken CO-100K (high-end) and the Sony ECM-100U (mid-range), and a newcomer, the Sonorous Objects SO.104 Ultrasonic Omni Microphone (budget).

1. Sanken CO-100K

Price: High-end, around $2,500

Frequency Range: 20 Hz – 100 kHz

Polar Pattern: Omnidirectional

Connection: XLR

Best for: Professional sound designers, high-end studios, scientific research

Overview: The Sanken CO-100K is the gold standard for ultrasonic recording in sound design. With its ultra-wide frequency range extending up to 100 kHz, it captures high-fidelity ultrasonic sounds with remarkable detail and precision. Built for high-end studios and demanding production environments, the CO-100K is perfect for those needing extreme accuracy and depth, whether recording fine textures or using pitch-shifting techniques to unveil hidden details. Its dual-capsule design allows for a wider, more forgiving polar pattern at extreme high frequencies.

Pros:

Exceptional frequency range (20 Hz – 100 kHz)

High build quality for durability and reliability

Cons:

High cost, suited only for studios or sound designers with large budgets, though you may be able to find one for rent.

2. Sony ECM-100U

Price: Mid-range, around $1,000

Frequency Range: 20 Hz – 50 kHz

Polar Pattern: Cardioid

Connection: XLR

Best for: Intermediate sound designers, mid-range studio setups

Overview: The Sony ECM-100U is an excellent mid-range option for those interested in high-frequency sound capture without the hefty price tag of top-tier models. While its frequency range caps at 50 kHz, it’s still more than sufficient for most ultrasonic applications, capturing detailed audio that can be slowed down and processed effectively. Its cardioid polar pattern makes it ideal for focused recordings.

Pros:

Strong frequency response up to 50 kHz, suitable for ultrasonic recording

Cardioid pattern ideal for isolating sound sources

Cons:

Limited upper frequency extension compared to higher-end models like the Sanken CO-100K. Recordings made with this microphone, or one with a similar frequency response, will hold up to less pitch shifting. However, if you cannot record at a sample rate above 96 kHz to capture those higher frequencies, it will make little difference.

3. Sonorous Objects SO.104 Ultrasonic Omni Microphone

Price: Budget, around $100

Frequency Range: 10 kHz – 70 kHz

Polar Pattern: Omnidirectional

Connection: XLR

Best for: Entry-level sound designers, experimental setups, smaller studios

Overview: The Sonorous Objects SO.104 Ultrasonic Omni Microphone is a compelling choice for those on a budget, delivering a frequency range that rivals more expensive models. It’s a versatile and affordable option for those new to ultrasonic recording or anyone looking to experiment with ultrasonic capture without a major investment. With its XLR connection, the SO.104 is easy to integrate into professional setups, making it a unique offering at this price point.

Pros:

Impressive frequency range at an affordable price

Compact and portable for on-the-go recording

Cons:

Lower build quality compared to high-end models, which may result in decreased durability and a shorter lifespan, especially in demanding or frequent use, and may point to other inconsistencies in their production. However, the low comparative price may offset these risks.

May lack some off-axis detail offered by more premium options.

Which Microphone Should You Choose?

Cheap plant mic options such as the Zoom H5 are only capable of recording up to 20 kHz. The SO.104 looks to be a significant upgrade over the handheld’s mics.

For Professional and Scientific Use: The Sanken CO-100K is the best choice for high-end studios and professionals with the budget for top-tier detail and durability. If you're working on scientific research projects, like analyzing animal vocalizations or capturing fine material sounds at ultrasonic frequencies, the CO-100K's extended range makes it invaluable. It’s also ideal for complex sound design projects, as its 100 kHz frequency extension is double that of mics such as the Sony ECM-100U, allowing roughly one more octave of downward pitch shifting before detail is lost.

For Versatile Mid-Range Use: The Sony ECM-100U strikes a balance between affordability and quality, offering a solid frequency range and a cardioid polar pattern well-suited for focused recordings. If you're aiming to capture clear, isolated sounds without a high price tag, this microphone is a solid choice, especially for sound designers who need robust ultrasonic capabilities but whose recording setups don't support sample rates above 96 kHz.

For Budget-Conscious and Experimental Use: The Sonorous Objects SO.104 is a compelling choice for those exploring ultrasonic sound on a budget. If you’re new to ultrasonic recording or looking to experiment with capturing high-frequency sounds in less controlled environments, the SO.104 is an affordable, well-specced microphone that still covers an extensive ultrasonic range. Its low price point also makes it ideal for fieldwork or other such risky setups, like placing the mic near unpredictable wildlife or in rugged outdoor environments, where damage to the microphones might be expected and their affordability allows for greater experimentation.
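One rough way to compare these options is to count how many octaves of content each mic offers above the audible range, once the recorder's sample rate is taken into account. The sketch below uses the frequency ranges quoted in this post and treats 20 kHz as the top of the audible range. The function names are hypothetical, and the usable limit is idealized as the lower of the mic's top frequency and half the sample rate.

```python
import math

AUDIBLE_LIMIT_HZ = 20_000  # approximate upper limit of human hearing

# Upper frequency limits as quoted in the comparison above.
MIC_TOP_HZ = {
    "Sanken CO-100K": 100_000,
    "Sony ECM-100U": 50_000,
    "Sonorous Objects SO.104": 70_000,
}


def usable_top_hz(mic_top_hz, sample_rate_hz):
    """Highest captured frequency: limited by the mic or by half the sample rate."""
    return min(mic_top_hz, sample_rate_hz / 2)


def octaves_above_audible(top_hz):
    """Octaves of content above the audible limit, i.e. pitch-down headroom."""
    return math.log2(top_hz / AUDIBLE_LIMIT_HZ)


if __name__ == "__main__":
    for rate in (96_000, 192_000):
        print(f"Recorder at {rate / 1000:g} kHz:")
        for name, mic_top in MIC_TOP_HZ.items():
            top = usable_top_hz(mic_top, rate)
            print(f"  {name}: {octaves_above_audible(top):.2f} octaves above 20 kHz")
```

At a 96 kHz sample rate all three mics are capped at 48 kHz, so the extra range of the more expensive options only pays off with a 192 kHz recorder.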

Final Thoughts

Ultrasonic microphones unlock a world of sound that’s otherwise inaccessible, adding depth, texture, and mystery to your sound design projects. From capturing the calls of bats to exploring the rich overtones of mechanical sounds, ultrasonic recording brings an unparalleled range of creative possibilities. Whether you’re investing in a high-end setup with the Sanken CO-100K or experimenting with the Sonorous Objects SO.104, ultrasonic microphones offer unique tools to enhance and expand your creative toolkit.

Encouraged by the given spec data, I recently purchased my own matched stereo pair of Sonorous Objects SO.104. Stay tuned for an upcoming review, where I'll dive deeper into its performance, share insights from my hands-on experience, and provide some measurement data and example recordings. With the right mic in hand, the hidden sounds of the world are yours to capture, manipulate, and transform.

THE ROLE OF RHYTHM AND TIMING IN SOUND DESIGN

I explore the fascinating world of sound design in film and how it can deviate from on-screen action to enhance storytelling.

Introduction

Sound design in film conveys meaning differently than imagery and must sometimes deviate from the action on screen to enhance the storytelling experience. Rhythm and timing play a crucial role in this aspect of sound design. Tighter micro-timing can make a sound feel powerful and advanced, while looser micro-timing can give the impression of something worn or damaged.

However, there are some notable exceptions where the sound design comes first. In the film "Baby Driver," the film was cut to the soundtrack, allowing the imagery to perfectly align with the sound design, leading to a very satisfying and cohesive edit. The music the main character listens to through the film is integral to both his driving as well as the overall narrative, and the effect is an incredibly tight and rhythmic groove that lets us feel the events of the film unfold from his perspective and underpins the emotional state of the main character.

Well-timed sound effects and music have a significant psychological impact, guiding the audience's emotions and attention. Despite exceptions like "Baby Driver", sound designers often must deviate from the visual imagery of the film.

Deviation from Visuals: An Artistic Choice

Sound does not always match on-screen actions, and this deviation is an artistic choice made by filmmakers and sound designers for various reasons:

Characterization: Enhancing the traits or state of a character.

Emotional Impact: Strengthening the emotional resonance of a scene.

Tension and Surprise: Using space to create or enhance anticipation and suspense.

Guiding Attention: Directing the audience’s focus to specific elements or moments.

In-Depth Example: Thor’s Hammer Mjölnir Reassembling in "Thor: Love and Thunder"

Scene Description

You can check out the clip with commentary from the director here.

Thor stands amidst a chaotic battle when he sees his long-lost hammer being wielded by a mysterious warrior in Asgardian armor closely resembling his own. He calls Mjölnir to his aid, but it does not obey. The mysterious warrior commands the shattered pieces of Mjölnir to take out several enemies. They then return, whizzing past Thor, and reassemble with a satisfying rhythmic magical energy.

Sound Design Analysis

Initial Silence: The scene starts with a chaotic battle against unknown foes. As we catch glimpses of Mjölnir, the battle sounds take a bit of a backseat to the sounds of the hammer, pulling our focus to highlight the most important element of the scene.

Magical Build-Up: A low hum begins, gradually increasing in volume and complexity. Build-ups like this are used to add anticipation and weight to what follows.

Rhythmic Pulses: Each piece of Mjölnir emits a distinct, rhythmic pulse and pitch as it locks into place. Note that these rapid, metallic impacts are similar but are all unique, avoiding the "machine gun" effect of repeated sounds.

Climactic Reassembly: The final pieces come together with a resonant, echoing boom, letting us know Mjölnir may be fragmented but it is no less powerful than before.

Deviation from Visuals

The interesting thing about this scene is that if you pay close attention you will notice that the sound of each piece reassembling does not coincide with the visuals. The imagery is wild and chaotic, with uneven timing between fragments, which also happen to outnumber the audible pings in the sound design, reinforcing the chaos of the battle. This contrasts with the even pinging of the sound design elements to highlight the heroic appearance of Mjölnir.

The magical hum and pulses are timed to build tension and emphasize the significance of Mjölnir’s reassembly, showing how it remains powerful despite being fragmented, while the final boom is slightly delayed from the rest, creating a dramatic pause that heightens the moment’s impact. Using fewer pings than there are visible hammer fragments also avoids cluttering the soundscape with overlapping sounds, providing clarity of intention.

Advice for Sound Designers

Knowing when to deviate from visuals and implement this technique can be challenging. As a sound designer, consider the emotional and narrative goals of the scene. Ask yourself:

What does the sound design need to convey beyond the visuals?

What is the primary goal? To enhance the emotional weight, or highlight a key narrative moment?

Deviating from the exact timing of visuals can be effective at emphasizing emotional beats, creating tension, focusing attention, or even enhancing surrealism. In this instance, the way the sound design deviates from the visuals ultimately serves to build up to the reveal of the mysterious fighter wielding Mjölnir: Thor's former love, Jane Foster.

Collaborate closely with the director and editor to understand their vision and experiment with different timings to see what feels most impactful. Trust your intuition and the feedback from initial screenings to fine-tune the sound design for the desired effect.

Conclusion

Rhythm and timing in sound design are essential for creating a compelling auditory experience in film. Deviations from visual timing can significantly enhance the viewer’s emotional engagement and overall experience. The impact of the feeling conveyed by the sound is often more important than strictly following the imagery. These 'mismatches' are hard to notice because they feel right and natural.

Next time you watch a film, pay close attention to the sound design and how it complements or deviates from the visuals to enhance the storytelling. You might be surprised at how much the sound influences your perception and enjoyment of the movie.

HOW TO CREATE BROADBAND ABSORBERS WITH ROCKWOOL: A STEP-BY-STEP GUIDE

Learn how to build cost-effective, professional-grade broadband sound panels using Rockwool insulation.

Introduction

One of 14 identical panels I made for my home studio, saving hundreds even after the cost of tools. The electric stapler was the real MVP.

When it comes to sound design and home studios, a well-treated space is paramount for achieving professional-grade results. Broadband absorbers are crucial for managing sound reflections, reducing reverberation, and improving overall acoustic quality in the room. By absorbing a wide range of frequencies, these panels help create a more controlled and pleasant sound environment.

However, pre-made panels can be prohibitively expensive, and a DIY approach can provide a cost-effective way to achieve professional-quality acoustic treatment that not only meets your acoustic requirements but also reflects your personal style and creativity.

For those embarking on a DIY project, Rockwool is a highly recommended material due to its exceptional acoustic properties. It provides even broadband absorption, is easy to handle, safe, cost-effective, and offers additional benefits such as fire resistance and moisture control. While fiberglass and other materials can also be effective for acoustic treatment, and will work pretty interchangeably with this guide, Rockwool was the best choice for my specific use case.

Fiberglass is a great absorber but can be more challenging to handle safely due to its tendency to shed fine particles, which can be irritating to the skin and lungs.

Rockwool is more user-friendly and easier to cut and shape without excessive dust.

Rockwool’s price and availability happened to be better at the time of my purchase, so it is important to keep that in mind as you design your own panels.

Creating your own broadband absorbers not only allows for significant cost savings—often exceeding 50% compared to pre-made panels—but also enables customization in terms of size, depth, shape, and aesthetics. This guide will walk you through the process of constructing broadband absorbers using Rockwool insulation.

Cost Considerations

Get the best bang for your buck with custom DIY panels.

Creating your own broadband absorbers with Rockwool is not only a rewarding DIY project but also a smart financial decision. Let's explore the cost savings you can expect by opting for a DIY approach compared to purchasing pre-made panels.

Cost of Pre-made Panels

Pre-made sound panels typically range from $50 to $150 per panel, depending on the brand, size, and quality.

Cost of DIY Rockwool Panels

Rockwool Insulation: A pack of rockwool insulation (e.g. Safe'n'Sound) costs around $50-$70 and can typically make 6-12 panels, depending on the size and thickness.

Fabric: Acoustically transparent fabric costs around $5-$20 per yard, and you might need 1-2 yards per panel.

Wood Frame: Wood for the frame might cost around $10-$20 per panel.

Additional Materials: Miscellaneous materials like adhesive, screws, and mounting hardware might add an extra $5-$10 per panel.

So, depending on your fabric and lumber choices, the total cost for a DIY panel can come in at well under $50, letting you treat more of your space for the same budget.
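To see how the per-panel figure depends on your choices, the sketch below simply adds up the low and high ends of the ranges listed above. It assumes the cheapest case pairs the lowest prices with the most panels per pack, and the priciest case does the opposite. The constant and function names are made up for illustration.

```python
# (low, high) figures quoted in the cost list above, in US dollars.
PACK_PRICE = (50, 70)       # one pack of Rockwool insulation
PANELS_PER_PACK = (6, 12)   # panels one pack can make
FABRIC_PER_YARD = (5, 20)
YARDS_PER_PANEL = (1, 2)
WOOD_PER_PANEL = (10, 20)
EXTRAS_PER_PANEL = (5, 10)  # adhesive, screws, mounting hardware


def panel_cost_range():
    """Return (cheapest, priciest) cost per panel from the ranges above."""
    cheapest = (
        PACK_PRICE[0] / PANELS_PER_PACK[1]
        + FABRIC_PER_YARD[0] * YARDS_PER_PANEL[0]
        + WOOD_PER_PANEL[0]
        + EXTRAS_PER_PANEL[0]
    )
    priciest = (
        PACK_PRICE[1] / PANELS_PER_PACK[0]
        + FABRIC_PER_YARD[1] * YARDS_PER_PANEL[1]
        + WOOD_PER_PANEL[1]
        + EXTRAS_PER_PANEL[1]
    )
    return cheapest, priciest


if __name__ == "__main__":
    low, high = panel_cost_range()
    print(f"Per-panel cost: roughly ${low:.2f} to ${high:.2f}")
```

Fabric is the biggest lever in these numbers: two yards of the most expensive fabric accounts for about half of the high-end total, so planning your cuts and fabric choice carefully is what keeps a panel under the $50 mark.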

Materials and Tools Required

Materials

Try to find lumber that is not warped, as warped boards make squaring corners difficult.

Insulation batting: I used 3” deep, 15.25x47” Safe'n'Sound batting

Lumber: 4x1 planks allow internal space for the batting and a flush center brace

Acoustically transparent (AT) fabric: I used Guilford of Maine (plus cheaper tulle backing fabric)

Wood screws

Corner brackets: Optional, for added stability.

Staples

Mounting hardware: French cleat recommended for secure and flush mounting

Stick-on foam bumpers: Optional, to prevent tilting/ vibrations

Tools

A speed square can help you achieve square corners quickly and easily.

Tape measure

Speed square

Pencil

Saw (jigsaw or circular saw)

Drill

Screwdriver

Fabric scissors

Staple gun (electric recommended)

Level

Safety equipment (gloves, mask, and goggles)

Planning and Measurements

Decide on the placement of your absorbers for optimal sound absorption. Prioritize key areas such as first reflection points, corners, and back walls. Consulting with acoustic treatment experts like GIK Acoustics, ATS Acoustics, or Acoustimac can provide valuable insights, as well as treatment options or mounting hardware that may be more difficult to DIY or source elsewhere.

Determine the size and quantity of absorbers needed based on your room's dimensions and acoustic requirements. For more information on this, check out my previous entries on mix room calibration part one and part two. Effective broadband absorbers typically combine a depth of Rockwool with an air gap to target specific frequencies, enhancing their efficiency. Utilize the "Product Data Sheet" or "Technical Data Sheet" for selecting your batting.

This document typically contains detailed information about the product's acoustic properties, such as its sound absorption coefficient, density, thickness, and other relevant specifications. You can usually find this sheet on the manufacturer's website or by contacting them directly.
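A common rule of thumb for how an air gap shifts a panel's useful range is the quarter-wavelength estimate: a porous absorber works best at and above the frequency whose quarter wavelength equals the distance from the wall to the front face of the panel. The sketch below applies that estimate. It is only a rough guide, not a substitute for the data sheet, and the function name and example depths are assumptions.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 degrees Celsius
INCH_M = 0.0254             # meters per inch


def quarter_wave_frequency_hz(panel_depth_in, air_gap_in=0.0):
    """Frequency whose quarter wavelength equals panel depth plus air gap."""
    distance_m = (panel_depth_in + air_gap_in) * INCH_M
    return SPEED_OF_SOUND_M_S / (4 * distance_m)


if __name__ == "__main__":
    for gap in (0.0, 3.0, 6.0):
        f = quarter_wave_frequency_hz(3.0, gap)
        print(f'3" panel with {gap:g}" air gap: about {f:.0f} Hz')
```

Real panels continue to absorb below this frequency, just less efficiently. The useful takeaway is the trend: doubling the total distance from the wall halves the estimate.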

Selecting Fabric

Burlap covered panels tend to look sloppy no matter how careful you are.

Choose a breathable AT fabric that allows sound to pass through, such as Guilford of Maine, which is specifically designed for acoustic treatments. When making your selection, look for the "Acoustic Performance Data Sheet" or "Acoustic Transparency Data Sheet." This document provides information on the fabric's acoustic properties, including its ability to allow sound to pass through while still providing adequate coverage for acoustic treatments. Alternatives like burlap or other heavy fabrics do not have this data and can often impair acoustic performance and durability. These fabrics will also not be as easy to tension correctly, leading to visual flaws.

Calculate the amount of fabric needed based on your panel dimensions and the width of the fabric roll. You may find certain orientations lead to less waste or fewer cuts.

In my case it was optimal to lay the long dimension of the panel across the width of the roll, where the only waste was at the top and bottom of the panel and could be left on the back without the need of additional cuts as this small bit of extra fabric would not be seen when mounted.
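The orientation comparison can be sketched as a small calculation. Everything numeric here is an assumption for illustration: the frame size (48.5" x 16.75" face, 3.5" deep), the 2" overlap onto the back, and the 66" roll width are hypothetical values, so substitute your own measurements.

```python
import math


def cut_size_in(face_long, face_short, depth, overlap):
    """Fabric piece needed to cover the face, wrap the sides, and overlap the back."""
    wrap = 2 * (depth + overlap)
    return face_long + wrap, face_short + wrap


def roll_length_needed_in(across, along, roll_width, panels):
    """Roll length used when each piece spans `across` the roll width.

    Returns None if the piece is wider than the roll in this orientation.
    """
    per_row = int(roll_width // across)
    if per_row == 0:
        return None
    rows = math.ceil(panels / per_row)
    return rows * along


if __name__ == "__main__":
    long_cut, short_cut = cut_size_in(48.5, 16.75, depth=3.5, overlap=2.0)
    roll_width, panels = 66.0, 14  # hypothetical roll width, 14 panels
    a = roll_length_needed_in(long_cut, short_cut, roll_width, panels)
    b = roll_length_needed_in(short_cut, long_cut, roll_width, panels)
    print(f"Long side across the roll:  {a / 36:.1f} yards")
    print(f"Short side across the roll: {b / 36:.1f} yards")
```

With these example numbers, laying the long dimension across the roll uses less fabric, and the leftover width simply wraps onto the back of the panel.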

Cutting the Frame

Measure and mark the 4x1 lumber so that the internal dimensions of the finished frame match the dimensions of the Rockwool batts.

Arrange the cuts to minimize waste and avoid knots, which can weaken the structure.

Cut the lumber to size, ensuring precise measurements for a snug fit around the Rockwool without compressing the batting, as this will compromise the performance of the material.

In my case I needed 3 lengths of cuts: the long sides (2 per panel), the short sides (2 per panel), and the center brace (one per panel), which is shorter so that it fits between the long sides and lies flush with the exterior edge of the frame.
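A cut list like this can be worked out from the batt size and the board thickness. The sketch below assumes one particular joint layout, which is an inference rather than something stated above: the short sides cap the ends of the long sides, and the brace spans only the internal width. It also assumes a board thickness of 0.75", so measure your own lumber.

```python
def cut_list_in(batt_length, batt_width, board_thickness):
    """Cut lengths (inches) for one frame whose inside matches the batt.

    Assumed layout: short sides overlap the ends of the long sides,
    and the center brace fits between the long sides.
    """
    return {
        "long side (x2)": batt_length,
        "short side (x2)": batt_width + 2 * board_thickness,
        "center brace (x1)": batt_width,
    }


def lumber_per_panel_in(cuts):
    """Total board length needed for one panel, ignoring saw kerf and waste."""
    return (
        2 * cuts["long side (x2)"]
        + 2 * cuts["short side (x2)"]
        + cuts["center brace (x1)"]
    )


if __name__ == "__main__":
    cuts = cut_list_in(batt_length=47.0, batt_width=15.25, board_thickness=0.75)
    for name, length in cuts.items():
        print(f'{name}: {length:g}"')
    print(f'Lumber per panel: {lumber_per_panel_in(cuts):g}"')
```

Add a small allowance for saw kerf and for working around knots when converting the total into boards to buy.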

Assembling the Frame

Align the corners of the cut lumber, using a speed square to ensure right angles.

You may wish to sand out any rough or uneven edges, especially if using alternative fabrics.

Drill pilot holes to prevent the wood from splitting, and join the pieces using screws—two per corner—leaving enough space along the edges for the staples.

For additional stability, consider reinforcing with metal corner brackets or scrap wood cut to triangles, the latter of which can help keep the batting in place.

Attach AT backing fabric (e.g., tulle) to the interior of the frame with staples to hold the Rockwool in place.

Preparing the Fabric for Rockwool

Cut the fabric to size, allowing some extra material for overlap—you want to avoid staples right on the edge of the fabric.

Lay the fabric flat, exterior side down.

At this point you may wish to apply a spray glue to the fabric where the batting will be placed, however with a wood frame design this shouldn't be necessary.

Handle the Rockwool carefully, wearing gloves and a mask to avoid skin irritation and inhalation of fibers.

Lay the Rockwool flat in the center of the fabric.

Attaching and Wrapping the Frame

Place the frame onto the Rockwool, with the backing side up, centered on the fabric.

Ensure the Rockwool is evenly distributed within the frame and is not being compressed or bunched up anywhere.

Begin stapling the fabric on each side of the center bracing, pulling it taut without overstretching.

Continue stapling evenly around the frame, spacing the staples about one staple width apart for even tension (electric staple gun highly recommended).

Work from the center outwards to maintain even tension and avoid waves or distortions in the fabric.

Begin by going from middle to right on one side, switching sides and going the full width from left to right, and finish with the first side going from the middle to the left end, ensuring it is even and taut as you go.

Fold and secure the corners neatly, tucking any excess fabric underneath for a clean finish. Ensure the corners are flat and uniform for the best appearance.

Uneven tension will be visible as waves or distortions in the fabric's thread, texture, or design, but this should not have much effect on the acoustic performance of the panel. An even wrap is easier to accomplish with a purpose-made fabric such as Guilford of Maine than with alternatives like burlap.

Mounting the Absorbers

Install the mounting hardware, such as French cleats, on the wall and the back of the absorbers.

You will want a secure mounting option as the panels will typically exceed 5 lbs, largely due to the weight of the frame.

Use a level and tape measure to ensure accurate placement.

Attach stick-on foam bumpers to the rear side of the corners of the panels to prevent tilting and vibrations, ensuring a secure and stable installation.

Conclusion

In the field of sound design, a well-treated space is crucial for achieving professional-grade results. Broadband absorbers crafted with Rockwool offer a rewarding DIY solution, enhancing your acoustic environment while providing customization and significant cost savings.

With careful planning and execution, your DIY broadband absorbers will transform your studio into a professional-grade acoustic space. Remember, a well-treated space not only improves sound quality but also fosters creativity and productivity in your work. Let your creativity shine through each step of the process, and enjoy the enhanced sound quality that your custom broadband absorbers bring.

IMPULSE RESPONSES: A VERSATILE TOOL FOR THE SOUND DESIGNER'S TOOLBOX

Learn how IRs are a vital tool in audio engineering and sound design. From capturing realistic reverberations and crafting innovative effects, to improving workflows and other practical applications, IRs empower artists to push the boundaries of creativity.

In the realm of audio engineering and sound design, believability and authenticity are often paramount, and Impulse Responses (IRs) are a great means for achieving these ends. In this article, we'll explore the essence of impulse responses, examining what they are, their place in audio engineering and acoustics, as well as why they're so useful for crafting immersive soundscapes.

Definition of Impulse Responses (IRs)

At its core, an impulse response is a representation of a system's behavior when subjected to an impulse: a sharp, short burst of sound that contains all frequencies. Mathematically, an impulse is a theoretical idealization, the Dirac delta function, which has infinite amplitude and infinitesimal duration. Put simply, an impulse response is a snapshot of how a space, a piece of equipment, or an effect responds to an input. This snapshot captures not only the immediate reverberation but also the intricate nuances of reflections, echoes, and resonances that define a sound environment.

Impulses can also be used as a test signal to measure the response of a system, such as a room or a piece of audio equipment. By analyzing the response of the system to the impulse, valuable information about its characteristics, such as reverberation time, frequency response, and phase, can be obtained.

Overall, in acoustics, an impulse serves as a fundamental tool for understanding and characterizing the behavior of acoustic systems and for various applications in audio engineering, sound design, room acoustics, and signal processing.
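In digital audio, applying an impulse response to a signal is done by convolution. The sketch below uses a deliberately tiny, made-up "room" (`toy_ir`: direct sound plus two quieter echoes) to show two things: feeding a single-sample impulse through the system returns the IR itself, and any other input comes out as overlapping, scaled copies of the IR. It is written in plain Python for clarity. Real convolution reverbs use much faster FFT-based methods.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution: each input sample triggers a scaled copy of the IR."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, sample in enumerate(signal):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


if __name__ == "__main__":
    toy_ir = [1.0, 0.0, 0.5, 0.0, 0.25]  # hypothetical: direct sound + two echoes
    impulse = [1.0]                      # a digital unit impulse

    # Measuring the system with an impulse gives back its impulse response.
    print(convolve(impulse, toy_ir))

    # Any other sound picks up the same echoes.
    dry = [1.0, -1.0]
    print(convolve(dry, toy_ir))
```

This is why a single well-captured IR is enough to place any dry recording in the measured space, as long as the space behaves linearly.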

Importance of IRs in Audio Engineering and Sound Design

The significance of IRs cannot be overstated. They serve as fundamental building blocks for creating realistic reverberations, emulating the sonic characteristics of different spaces or hardware, and sculpting creative effects that push the boundaries of imagination. Whether it's recreating the ambience of a concert hall, capturing the warmth of a vintage guitar amplifier, or conjuring otherworldly soundscapes, IRs provide the essential tools for sonic artists to paint with precision and depth.

Summary

In this article, we navigate the creation and uses of impulse responses by:

Exploring their many uses in audio production

Contrasting the linearity of IR reverbs with the nonlinearity of algorithmic reverbs

Uncovering the art and science behind crafting authentic IRs

Additionally, we cover their practical applications within room acoustics and how inverted impulse responses can be used for room correction, as well as showcase popular software solutions that harness the power of IRs for audio enhancement.

Join me as we unravel the mysteries of impulse responses, unlocking new dimensions of sonic creativity and fidelity.

Uses for Impulse Responses

Impulse responses (IRs) are versatile tools with a wide array of applications in audio engineering and sound design. Let's explore some of the primary uses of impulse responses:

Reverbs of Spaces or Hardware

One of the most common applications of IRs is recreating the reverberation characteristics of physical spaces or hardware units. Because an IR captures the unique sonic fingerprint of a room, hall, or reverb unit, we can emulate specific acoustic environments, such as remote or unique locations on a film set, as well as hardware reverb units we can't always cart around with us, such as a full-sized plate reverb. This adds depth and dimension to audio recordings in a way that precisely matches our other recordings.
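Under the hood, an IR reverb is a convolution of the dry signal with the IR. The sketch below shows one way to do that with NumPy's FFT routines. The function name convolution_reverb, the wet_mix parameter, and the synthetic decaying-noise IR are my own placeholders; in real use you would load a recorded IR from a file instead.

```python
import numpy as np

def convolution_reverb(dry, ir, wet_mix=0.3):
    """Convolve `dry` with `ir` via the FFT and blend the result with the dry signal."""
    n_out = len(dry) + len(ir) - 1                  # full length including the tail
    n_fft = 1 << (n_out - 1).bit_length()           # next power of two >= n_out
    spectrum = np.fft.rfft(dry, n_fft) * np.fft.rfft(ir, n_fft)
    wet = np.fft.irfft(spectrum, n_fft)[:n_out]
    dry_padded = np.pad(dry, (0, n_out - len(dry)))  # pad dry to match the tail
    return (1.0 - wet_mix) * dry_padded + wet_mix * wet

sample_rate = 48_000
rng = np.random.default_rng(1)
t = np.arange(sample_rate) / sample_rate

# Placeholder IR: one second of exponentially decaying noise (not a real space).
synthetic_ir = rng.standard_normal(sample_rate) * np.exp(-6.0 * t)
synthetic_ir /= np.max(np.abs(synthetic_ir))

# Placeholder source: a single click followed by silence.
click = np.zeros(sample_rate // 2)
click[0] = 1.0

fully_wet = convolution_reverb(click, synthetic_ir, wet_mix=1.0)
print(len(fully_wet))   # dry length + IR length - 1
```

Running a single click through the reverb fully wet simply plays back the IR, which is a handy sanity check when auditioning a newly captured file.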

Speaker Responses

A real bullhorn would break up and distort. Use distortion in front of your bullhorn IR for a more realistic effect.

IRs are also instrumental in capturing the sound of microphone placements on guitar amplifiers and other speakers. By sampling the interaction between a microphone and a speaker cabinet, IRs enable precise emulation of different microphone positions and types. In music, this can facilitate the exploration of authentic guitar tones without the need for extensive mic setup and experimentation.

In sound design and post-production, this technique allows for realistic worldization that matches the rest of our audio. For example, we can take a line of dialogue or some diegetic music that is meant to be played from a speaker on screen and apply an impulse response of a matching radio, then run that processed signal through our chosen room reverb (ideally one that was captured on location in that same space) to place it seamlessly in our space alongside the other elements.

It is worth noting that impulse responses are linear and do not account for speaker breakup or other forms of nonlinearities, such as distortion (more on that later).

Creative Effects

Beyond traditional reverb applications, IRs can be employed to create a variety of creative effects. Most common impulse loaders allow any WAV file to be run through them (often with some caveats, such as file length), resulting in unique timbral transformations and spatial effects. Try loading one shot samples of various instruments for a unique spin on a more traditional reverb, or experiment with musical loops for strange rhythmic delays.

By harnessing the power of impulse responses, audio engineers and sound designers can enhance their productions with lifelike reverberation, authentic speaker tones, and innovative sound effects. IRs remain indispensable tools in the modern audio toolbox, enabling artists to explore new sonic territories and push the boundaries of creativity.

Linearity in Impulse Responses vs Non-Linearity In Algorithmic Reverbs

There are two primary methods that stand out for creating reverberation effects: impulse responses and algorithmic reverbs. Both algorithmic reverbs and IRs serve as invaluable tools, allowing for the recreation of acoustic environments and the emulation of various audio hardware. However, it's essential to understand their strengths and weaknesses.

Cannot Capture Nonlinear Systems with IRs

One fundamental limitation of impulse responses is their inability to capture nonlinear systems such as compression or distortion accurately. While IRs excel at capturing the linear response of a system, they fall short when it comes to representing nonlinear behaviors. As a result, complex effects like dynamic range compression or harmonic distortion cannot be reproduced using standard impulse responses alone, and additional processing may be needed in conjunction with their use for more believable results.
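Here is a small experiment that illustrates the limitation. It "captures an IR" from two hypothetical systems, a simple low-pass filter (linear) and a hard clipper (nonlinear), then checks how well each IR predicts what the system does to a sine tone. The function names and settings are my own choices for illustration.

```python
import numpy as np

def one_pole_lowpass(x, a=0.9):
    """Linear system: simple smoothing filter."""
    y = np.zeros(len(x))
    previous = 0.0
    for n, sample in enumerate(x):
        previous = (1.0 - a) * sample + a * previous
        y[n] = previous
    return y

def hard_clip(x, threshold=0.25):
    """Nonlinear system: anything beyond the threshold is flattened."""
    return np.clip(x, -threshold, threshold)

def measure_ir(system, length):
    """Feed the system a one-sample click and keep what comes out."""
    impulse = np.zeros(length)
    impulse[0] = 1.0
    return system(impulse)

tone = np.sin(2 * np.pi * np.arange(256) / 32)    # test tone, 32 samples per cycle

def prediction_error(system):
    """Largest gap between the real output and the IR-based prediction."""
    ir = measure_ir(system, len(tone))
    predicted = np.convolve(tone, ir)[:len(tone)]
    return float(np.max(np.abs(system(tone) - predicted)))

linear_error = prediction_error(one_pole_lowpass)
clip_error = prediction_error(hard_clip)
print(f"low-pass error: {linear_error:.2e}, clipper error: {clip_error:.3f}")
```

The filter's IR predicts its output essentially perfectly, while the clipper's IR only predicts a quieter copy of the tone and misses the flattened peaks entirely.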

Heuristic Differentiation Of IRs From Algorithmic Reverbs

Impulse responses are static representations of real acoustic spaces or hardware units. They capture a snapshot of the reverberation characteristics at a specific moment in time. In contrast, algorithmic reverbs are dynamic in nature, using mathematical algorithms to simulate reverberation based on adjustable parameters such as room size, decay time, and diffusion, allowing you freedom to sculpt your space in a way that individual IR reverbs generally can’t.
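As a rough illustration of how an algorithmic reverb turns a parameter like decay time into sound, here is a single feedback comb filter, a classic building block of many algorithmic designs. This is a sketch of the general idea only, not how any particular plugin works, and the function names are hypothetical. The feedback gain g for a loop of d samples that should fall by 60 dB in T seconds at sample rate fs is g = 10^(-3d / (T * fs)).

```python
import numpy as np

def comb_feedback_gain(delay_samples, decay_time_s, sample_rate):
    """Feedback gain that makes a delay loop fall by 60 dB in `decay_time_s`."""
    return 10.0 ** (-3.0 * delay_samples / (decay_time_s * sample_rate))

def feedback_comb(x, delay_samples, gain):
    """One recirculating delay line: each pass around the loop is scaled by `gain`."""
    y = np.array(x, dtype=float)
    for n in range(delay_samples, len(y)):
        y[n] += gain * y[n - delay_samples]
    return y

def level_db(value):
    return 20.0 * np.log10(abs(value))

sample_rate = 48_000
delay_samples = 2_400                  # 50 ms loop, a crude "room size" control
click = np.zeros(2 * sample_rate)
click[0] = 1.0

# Same loop length, two different decay-time settings.
short_tail = feedback_comb(click, delay_samples, comb_feedback_gain(delay_samples, 0.5, sample_rate))
long_tail = feedback_comb(click, delay_samples, comb_feedback_gain(delay_samples, 1.0, sample_rate))

print(level_db(short_tail[sample_rate // 2]))   # level of the echo at 0.5 s
print(level_db(long_tail[sample_rate]))         # level of the echo at 1.0 s
```

Changing the decay time just changes one number in the loop, which is the kind of freedom a fixed IR recording does not offer.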

A practical way of understanding the difference between IR reverbs and algorithmic reverbs, such as the beautiful and realistic algorithmic offerings from Exponential Audio, is to compare how each behaves when played alongside a printed, polarity-inverted copy of its own output. It is worth noting that some IR reverb plugins also include time-varying or non-linear processing features, such as chorusing or saturation, and with those engaged they will not fully null either, much like a purely algorithmic reverb.

Here is an example of the two different reverbs, applied to a recording of hands clapping:

When an IR reverb's output is played alongside a printed, polarity-inverted copy of itself, the two signals cancel each other out completely, because IR reverbs are linear and time-invariant. This is not the case with algorithmic reverbs. Listen to the differences in the examples below. Though faint, you will still be able to hear an output from the algorithmic reverb when the copy is inverted, as the variations introduced by the algorithm will not fully null when summed.
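The null test can be simulated in a few lines. In this sketch the "IR reverb" is a plain convolution, and the "algorithmic reverb" is a deliberately simplified stand-in: a single delay tap whose delay time is swept by a free-running LFO, so that each pass starts at a different point in the modulation cycle. Real algorithmic reverbs are far more elaborate; the modulated_reverb function, its settings, and the synthetic clap are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
clap = rng.standard_normal(4_000) * np.exp(-np.arange(4_000) / 300.0)   # placeholder clap
ir = rng.standard_normal(2_000) * np.exp(-np.arange(2_000) / 400.0)     # placeholder IR

def ir_reverb(x):
    """Linear, time-invariant: the same input always gives the same output."""
    return np.convolve(x, ir)

def modulated_reverb(x, lfo_phase):
    """Toy time-varying effect: a delay tap swept by an LFO starting at `lfo_phase`."""
    n = np.arange(len(x))
    delay = 200.0 + 20.0 * np.sin(2 * np.pi * n / 1_500.0 + lfo_phase)
    delayed = np.interp(n - delay, n, x, left=0.0)   # fractional-delay read
    return x + 0.7 * delayed

def null_residual(printed, live):
    """Sum the live pass with a polarity-inverted print and report the loudest sample left."""
    return float(np.max(np.abs(live + (-printed))))

ir_residual = null_residual(ir_reverb(clap), ir_reverb(clap))
algo_residual = null_residual(modulated_reverb(clap, 0.0), modulated_reverb(clap, 1.3))
print(f"IR reverb residual: {ir_residual}, modulated reverb residual: {algo_residual:.3f}")
```

The convolution nulls to silence, while the modulated effect leaves an audible remainder because the two passes never line up exactly.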

Workflow Implications

While algorithmic reverbs offer a great degree of flexibility, they come with their own set of workflow implications. First, they may require more pre- and post-roll when punching in changes, taking more time and increasing session file size. Second, unlike static IRs, algorithmic reverbs require additional processing power to generate reverberation in real time. Since IR-based processing tends to be much more computationally efficient than algorithmic processing, understanding when to take advantage of this can enhance CPU efficiency in audio production workflows and allow your sessions to operate more smoothly, especially as track counts increase and processing power is at a premium.

Crafting Impulse Responses: Multiple Paths to Authenticity

Crafting impulse responses is both an art and a science, offering multiple methods to capture the sonic characteristics of real-world spaces, miking distances, and hardware. Logic’s Space Designer and Impulse Response Utility are great tools for creating your own impulse responses and provide excellent documentation to guide you through their creation. Let's explore the common techniques for creating IRs and their respective pros and cons:

1. Convolution (Sine Sweep) Method:

This method involves playing a 20 Hz to 20 kHz sine sweep through a system or space and recording the result. The recorded sweep is then deconvolved against the source sweep, a mathematical process that collapses the sweep back into a short blip at the beginning of the new IR file and places every reflection and resonance after it at its correct time and level.

Pros: Offers precise control over the frequency range and amplitude of the impulse, resulting in accurate IRs. Can be particularly effective for capturing linear systems.

Cons: Requires more equipment and setup, and the deconvolution process can be time-consuming. You are also limited to the frequency range and timbral balance of your loudspeaker when recording IRs of spaces.

Tips: Ensure accurate playback and recording equipment, minimize background noise during recording, and use high-quality microphones for optimal results. Keep your recorded sweeps aligned with and the same length as the source sweep.
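For the curious, the sweep-and-deconvolve process can be sketched in Python with NumPy. This is a minimal illustration under my own assumptions, not the method any particular tool uses: exponential_sweep and deconvolve are hypothetical helper names, the regularization value is an arbitrary choice that keeps the division stable outside the sweep's frequency range, and the "room" is simulated with three made-up reflections instead of a real recording.

```python
import numpy as np

def exponential_sweep(f_start, f_end, duration, sample_rate):
    """Sine sweep whose frequency rises exponentially from f_start to f_end."""
    t = np.arange(int(duration * sample_rate)) / sample_rate
    rate = np.log(f_end / f_start)
    phase = 2 * np.pi * f_start * duration / rate * (np.exp(t * rate / duration) - 1.0)
    return np.sin(phase)

def deconvolve(recorded, sweep, ir_length, regularization=1e-6):
    """Divide the recording's spectrum by the sweep's spectrum (with a safety floor)."""
    n_fft = 1 << (len(recorded) + len(sweep) - 1).bit_length()
    recorded_f = np.fft.rfft(recorded, n_fft)
    sweep_f = np.fft.rfft(sweep, n_fft)
    power = np.abs(sweep_f) ** 2
    floor = regularization * np.max(power)        # avoids dividing by near-zero bins
    ir_f = recorded_f * np.conj(sweep_f) / (power + floor)
    return np.fft.irfft(ir_f, n_fft)[:ir_length]

sample_rate = 48_000
sweep = exponential_sweep(20.0, 20_000.0, duration=1.0, sample_rate=sample_rate)

# Simulated "space": direct sound plus two reflections (made-up values).
true_ir = np.zeros(512)
true_ir[0], true_ir[100], true_ir[250] = 1.0, 0.5, -0.25
recorded = np.convolve(sweep, true_ir)            # what the microphone would capture

estimated_ir = deconvolve(recorded, sweep, ir_length=512)
print(int(np.argmax(np.abs(estimated_ir))))       # position of the direct sound
```

Note that the recording here starts at exactly the same sample as the source sweep. Any offset between the two shows up as extra delay (or a wrapped-around start) in the resulting IR, which is why keeping the files aligned matters.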

2. Starter Pistol (or Clapper) Method:

Be sure to use a method capable of generating low frequencies; a hand clap may not be the best choice!

A starter pistol or clapper is used to create a sharp impulse in a space or through a hardware unit. The resulting sound is recorded and trimmed so that any space before and after the impulse of the pistol/clapper is removed. The resulting file is used as an IR.

Pros: Simple and straightforward method requiring minimal equipment. Can capture the acoustic characteristics of a space or hardware unit effectively.

Cons: May lack precision compared to the sine sweep method, particularly in capturing low-frequency information. The recordings require careful attention during further editing to be used as an IR.

Tips: Pay attention to the timing and placement of the impulse source for precise results, and consider using multiple recordings from different positions for a comprehensive capture of the space or equipment's characteristics.
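The trimming step for this method can be automated. Below is a minimal sketch assuming a mono recording that contains a single, non-silent bang; the trim_to_ir name, the -40 dB threshold, and the pre-roll and fade lengths are arbitrary starting points of my own rather than established standards, and the test recording is synthetic.

```python
import numpy as np

def trim_to_ir(recording, sample_rate, threshold_db=-40.0, pre_roll_ms=1.0, fade_ms=20.0):
    """Cut silence around the bang, fade out the tail, and normalize the peak to 1.0."""
    peak = np.max(np.abs(recording))
    threshold = peak * 10.0 ** (threshold_db / 20.0)
    above = np.flatnonzero(np.abs(recording) >= threshold)
    pre_roll = int(sample_rate * pre_roll_ms / 1000.0)
    start = max(above[0] - pre_roll, 0)           # keep a hair of lead-in before the onset
    end = above[-1] + 1                           # last sample still above the threshold
    ir = recording[start:end].astype(float)
    fade_len = min(int(sample_rate * fade_ms / 1000.0), len(ir))
    if fade_len > 0:
        ir[-fade_len:] *= np.linspace(1.0, 0.0, fade_len)   # avoid a click at the cut
    return ir / np.max(np.abs(ir))

# Synthetic stand-in for a recording: silence, a decaying burst, then silence.
sample_rate = 48_000
recording = np.zeros(sample_rate)
n = np.arange(20_000)
recording[1_000:21_000] = np.exp(-n / 2_000.0) * (-1.0) ** n

ir = trim_to_ir(recording, sample_rate)
print(len(recording), "->", len(ir), "samples")
```

A lower threshold keeps more of the tail at the cost of more room noise, so it is worth auditioning a few settings against the noise floor of the actual recording.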

By understanding the strengths and limitations of each method, audio engineers and sound designers can choose the most appropriate approach for capturing impulse responses that accurately represent the desired sonic characteristics. Whether using the precision of the convolution method or the simplicity of the starter pistol method, crafting IRs opens up a world of possibilities for creating immersive and authentic audio experiences.

Practical Applications: Harnessing Inverted Impulse Responses for Room Correction

Impulse responses can play a crucial role in system tuning and room correction, offering a way to correct both the frequency response and phase response. For more information on system tuning and room correction, you can check out my previous blog posts on the subject in Understanding Mix Room Calibration: Part 1 and Part 2. Here's a brief overview of how inverted impulse responses are utilized for this purpose.

Using IRs for Room Correction

Room correction involves measuring the acoustic properties of a listening space and applying corrective measures to compensate for anomalies in the system's response. Inverted IRs can be employed to mitigate the effects of room reflections and resonances in ways that simple EQ adjustments cannot. However, the technique is not without its pitfalls.

Ø (which resembles the Greek letter phi, Φ) is commonly used to denote the polarity inversion control on EQs or consoles. Often mislabeled the “phase invert” button, the “polarity invert” button merely swaps the positive and negative halves of the waveform without affecting the timing, whereas “phase invert” implies a shift in timing.
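The difference between polarity and timing is easy to demonstrate. In this sketch (test tones and names are my own), a signal containing 100 Hz and 1 kHz is summed with a polarity-flipped copy and, separately, with a copy delayed by half a cycle of 1 kHz.

```python
import numpy as np

sample_rate = 48_000
t = np.arange(4_800) / sample_rate
mix = np.sin(2 * np.pi * 100 * t) + np.sin(2 * np.pi * 1_000 * t)

# Polarity inversion: every sample changes sign, nothing moves in time.
polarity_flipped = -mix
polarity_residual = float(np.max(np.abs(mix + polarity_flipped)))

# Timing shift: delay by half a cycle of 1 kHz (24 samples at 48 kHz).
half_cycle = sample_rate // (2 * 1_000)
delayed = np.concatenate([np.zeros(half_cycle), mix[:-half_cycle]])
delay_residual = float(np.max(np.abs(mix[half_cycle:] + delayed[half_cycle:])))

print(f"polarity flip leaves {polarity_residual}, half-cycle delay leaves {delay_residual:.2f}")
```

The polarity flip cancels everything, while the delay only cancels the 1 kHz tone and leaves the 100 Hz tone almost untouched, because a fixed delay is a different amount of phase shift at every frequency.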

Volume Inversion

By inverting the amplitude of an impulse response and applying it to an audio signal, room correction systems can effectively cancel out the reflections caused by room boundaries. This helps to equalize the sound, resulting in improved clarity and detail in audio reproduction.
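One way to read "volume inversion" is as inverting the magnitude (level versus frequency) of the measured response, so that dips are boosted and peaks are cut. The sketch below builds such a correction filter under that assumption. It is not how any commercial product is implemented: inverse_magnitude_filter and its max_boost_db cap are hypothetical, and the "room" is a direct sound plus one made-up reflection.

```python
import numpy as np

def inverse_magnitude_filter(room_ir, n_fft=4_096, max_boost_db=12.0):
    """Linear-phase FIR whose gain is 1 / |room response|, with the boost capped."""
    magnitude = np.abs(np.fft.rfft(room_ir, n_fft))
    max_gain = 10.0 ** (max_boost_db / 20.0)
    gain = np.minimum(1.0 / np.maximum(magnitude, 1e-12), max_gain)
    zero_phase = np.fft.irfft(gain, n_fft)        # symmetric around sample 0
    return np.roll(zero_phase, n_fft // 2)        # centered, so it adds n_fft / 2 samples of latency

n_fft = 4_096
room_ir = np.zeros(256)
room_ir[0], room_ir[40] = 1.0, 0.5                # direct sound plus one reflection

correction = inverse_magnitude_filter(room_ir, n_fft)

# Check the combined level response on the same frequency grid.
room_mag = np.abs(np.fft.rfft(room_ir, n_fft))
corrected_mag = room_mag * np.abs(np.fft.rfft(correction, n_fft))
print(f"before: {room_mag.min():.2f} to {room_mag.max():.2f}")
print(f"after:  {corrected_mag.min():.2f} to {corrected_mag.max():.2f}")
```

The boost cap matters in practice: a deep cancellation notch would otherwise demand a huge boost at that frequency, eating headroom and stressing the speakers for a problem that only exists at one listening position.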

Phase Inversion

In addition to volume inversion, phase inversion techniques can be used to address phase discrepancies introduced by room reflections. Phase inversion may cause audible pre-ringing artifacts unless your fit and inversion is greater than, say, 60 dB. Alternatively, a program such as FIR Designer lets you select which filters are linear phase and which are minimum phase, so you can reduce pre-ringing artifacts by using minimum phase in the low end, where pre-ringing is most noticeable.
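To show where pre-ringing comes from, the sketch below builds the same correction curve twice: once as a linear-phase filter and once as a minimum-phase filter, using the textbook real-cepstrum (homomorphic) method. This is a generic illustration under my own assumptions, not a description of how FIR Designer or any other product works, and the helper names are hypothetical.

```python
import numpy as np

def linear_phase_fir(gain_half, n_fft):
    """Symmetric FIR: the zero-phase response shifted to the middle of the buffer."""
    return np.roll(np.fft.irfft(gain_half, n_fft), n_fft // 2)

def minimum_phase_fir(gain_half, n_fft):
    """Same magnitude, minimum phase, via the real cepstrum (homomorphic method)."""
    gain_full = np.concatenate([gain_half, gain_half[-2:0:-1]])   # mirror to a full spectrum
    cepstrum = np.fft.ifft(np.log(np.maximum(gain_full, 1e-8))).real
    fold = np.zeros(n_fft)
    fold[0] = 1.0
    fold[1:n_fft // 2] = 2.0           # fold the "negative time" half onto the positive half
    fold[n_fft // 2] = 1.0
    return np.fft.ifft(np.exp(np.fft.fft(fold * cepstrum))).real

def pre_ring_fraction(fir):
    """Share of the filter's energy that arrives BEFORE its main peak."""
    peak = int(np.argmax(np.abs(fir)))
    return float(np.sum(fir[:peak] ** 2) / np.sum(fir ** 2))

n_fft = 4_096
room_ir = np.zeros(256)
room_ir[0], room_ir[40] = 1.0, 0.5                      # made-up direct sound plus reflection
gain = 1.0 / np.abs(np.fft.rfft(room_ir, n_fft))        # correction curve to realize

linear_fir = linear_phase_fir(gain, n_fft)
minimum_fir = minimum_phase_fir(gain, n_fft)

linear_pre = pre_ring_fraction(linear_fir)
minimum_pre = pre_ring_fraction(minimum_fir)
print(f"energy before the peak: linear {linear_pre:.3f}, minimum {minimum_pre:.3f}")
```

Both filters apply the same level correction, but the linear-phase version spreads part of its energy ahead of the main peak, which is what can be heard as pre-ringing on transients. The minimum-phase version puts all of its energy at or after the peak.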

Showcase of Software Solutions for Room Correction Utilizing IRs

Several software solutions are available for room correction that utilize inverted impulse responses to correct room acoustics:

Sonarworks Reference: Sonarworks Reference offers a user-friendly interface for measuring room responses and generating corrective filters based on inverted impulse responses. It provides real-time monitoring and correction for headphones and speakers, allowing users to achieve a more accurate and consistent listening experience.

Dirac Live: Dirac Live utilizes advanced room correction algorithms to optimize the sound reproduction in listening environments. It measures room acoustics with precision and generates correction filters based on inverted impulse responses, resulting in improved clarity, imaging, and tonal balance.

Room EQ Wizard (REW): Room EQ Wizard is a powerful room measurement and correction tool that supports a wide range of measurement techniques and analysis tools. It allows users to measure room responses, generate corrective filters, and visualize room acoustics using inverted impulse responses and other measurement data.

By utilizing these software solutions, audio enthusiasts can effectively harness inverted impulse responses for room correction, achieving more accurate and faithful sound reproduction in their listening spaces.

Conclusion

Impulse responses are a crucial part of the modern audio engineer's and sound designer's toolbox, offering a versatile option for capturing, recreating, and manipulating the sonic characteristics of real-world spaces and audio hardware. Throughout this exploration, we've covered the many uses of impulse responses, uncovering their importance to sound design as well as practical applications in audio reproduction.

From faithfully recreating the reverberation of real spaces to creating experimental effects, IRs provide unparalleled flexibility and precision in shaping soundscapes. Their ability to capture the intricate details of acoustic environments and hardware units enables audio engineers and sound designers to achieve immersive and authentic audio experiences.